Bufang Yang, Yunqi Guo, Lilin Xu, Zhenyu Yan, Hongkai Chen, Guoliang Xing, and Xiaofan Jiang. SocialMind: LLM-based Proactive AR Social Assistive System with Human-like Perception for In-situ Live Interactions. In Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, 2025

AI for Health

Our laboratory is at the forefront of integrating AI into healthcare, focusing on areas such as chronic disease monitoring, including Alzheimer’s disease, enhancing medical diagnosis with large language models, and modernizing Traditional Chinese Medicine. These initiatives strive to create scalable and ethical AI solutions that enhance patient outcomes, broaden access, and tailor care on a global scale.

- SenSys '25

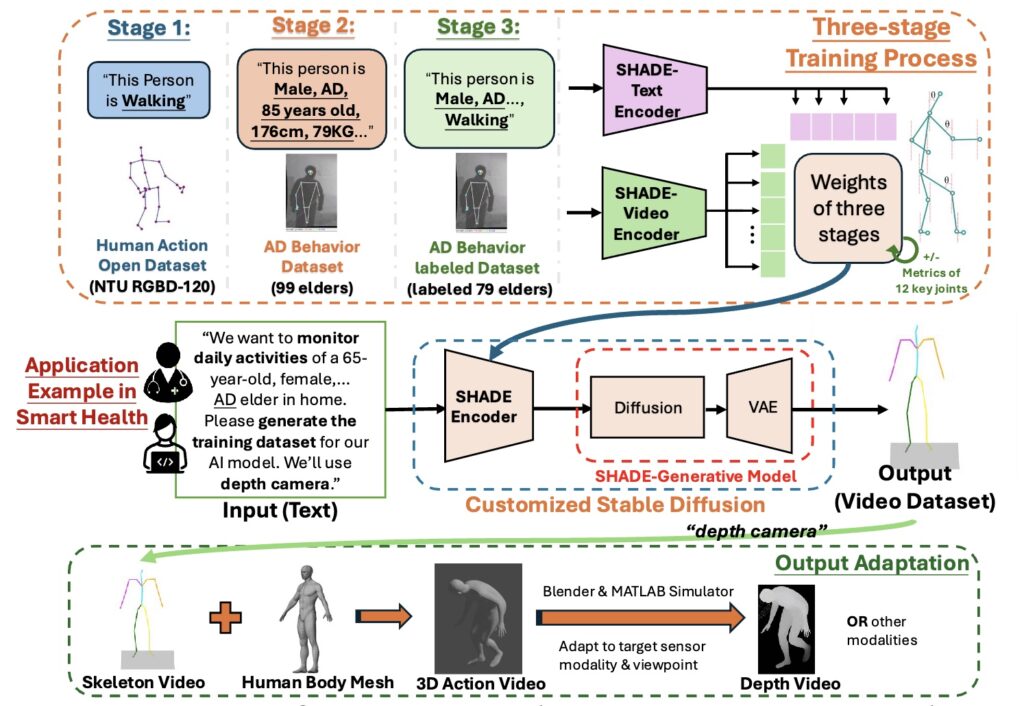

SHADE-AD is a Large Language Model (LLM) framework for Synthesizing Human Activity Datasets Embedded with AD (Alzheimer’s disease) features. Leveraging both public datasets and data we collected from 99 AD patients, SHADE-AD synthesizes human activity videos that specifically represent AD-related behaviors.

- MobiCom '24

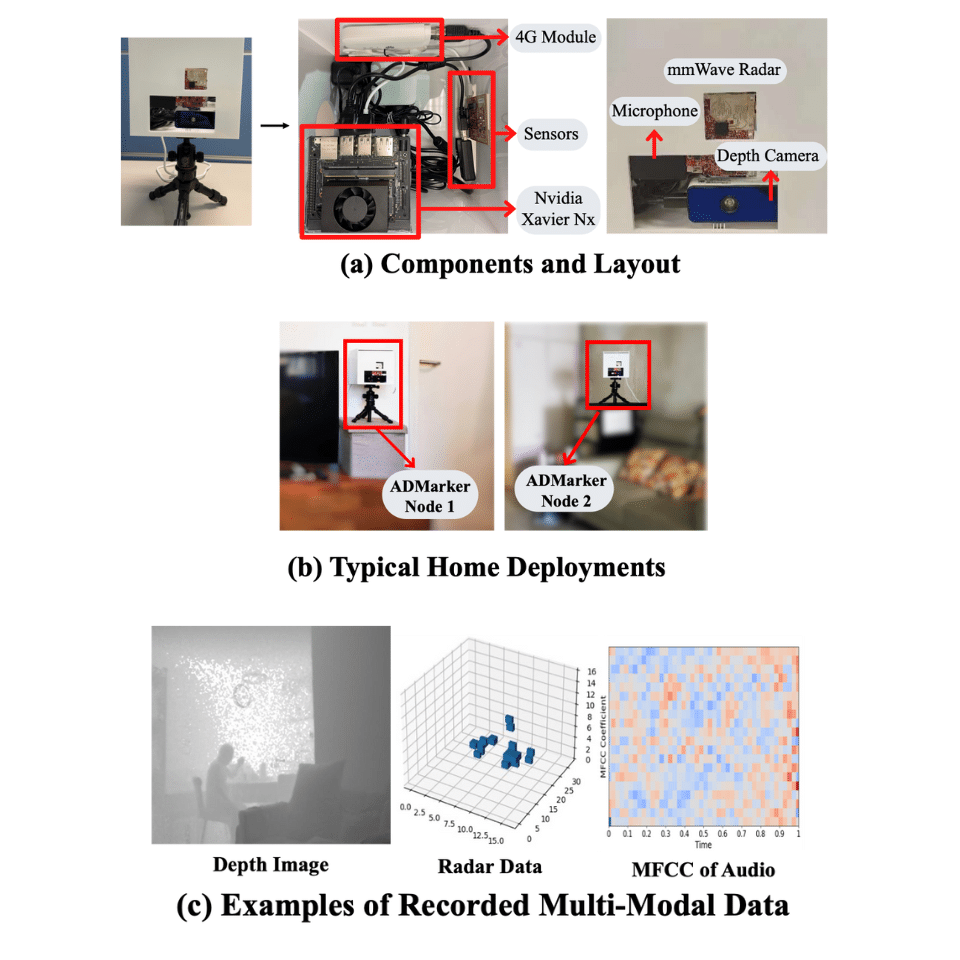

ADMarker offers a new platform that allows AD clinicians to characterize and track, in a longitudinal manner, the complex correlations among multidimensional interpretable digital biomarkers, patients’ demographic factors, and AD diagnosis.

- IMWUT '24

DrHouse introduces a novel diagnostic algorithm that concurrently evaluates potential diseases and their likelihood, facilitating more nuanced and informed medical assessments.

- MobiSys '23

Harmony is a new system for heterogeneous multi-modal federated learning. It disentangles multi-modal network training into a novel two-stage framework: modality-wise federated learning and federated fusion learning.
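To make the two stages concrete, the sketch below runs plain federated averaging (FedAvg) separately for each modality's encoder in stage one, then averages fusion-layer weights only across clients that own every modality in stage two. This is a simplified illustration under stated assumptions (flat parameter lists, hypothetical client records with `encoders`, `fusion`, and `num_samples` fields), not Harmony's actual algorithm.

```python
from typing import Dict, List, Set, Tuple

Params = List[float]  # a model's parameters flattened into one vector (assumption)


def fedavg(updates: List[Params], weights: List[int]) -> Params:
    """Sample-count-weighted average of parameter vectors (standard FedAvg)."""
    total = sum(weights)
    return [
        sum(w * u[i] for u, w in zip(updates, weights)) / total
        for i in range(len(updates[0]))
    ]


def modality_wise_round(clients: List[dict]) -> Dict[str, Params]:
    """Stage 1: aggregate each modality's encoder over the clients that own that modality."""
    groups: Dict[str, Tuple[List[Params], List[int]]] = {}
    for c in clients:
        for modality, encoder in c["encoders"].items():
            ups, ws = groups.setdefault(modality, ([], []))
            ups.append(encoder)
            ws.append(c["num_samples"])
    return {m: fedavg(ups, ws) for m, (ups, ws) in groups.items()}


def fusion_round(clients: List[dict], modalities: Set[str]) -> Params:
    """Stage 2: aggregate fusion layers only over clients that own every modality."""
    full = [c for c in clients if set(c["encoders"]) >= modalities]
    return fedavg([c["fusion"] for c in full], [c["num_samples"] for c in full])
```

Separating the stages lets unimodal clients still contribute to the encoders of the modalities they do have, while only multi-modal clients shape the fusion layers.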

- MobiCom '22

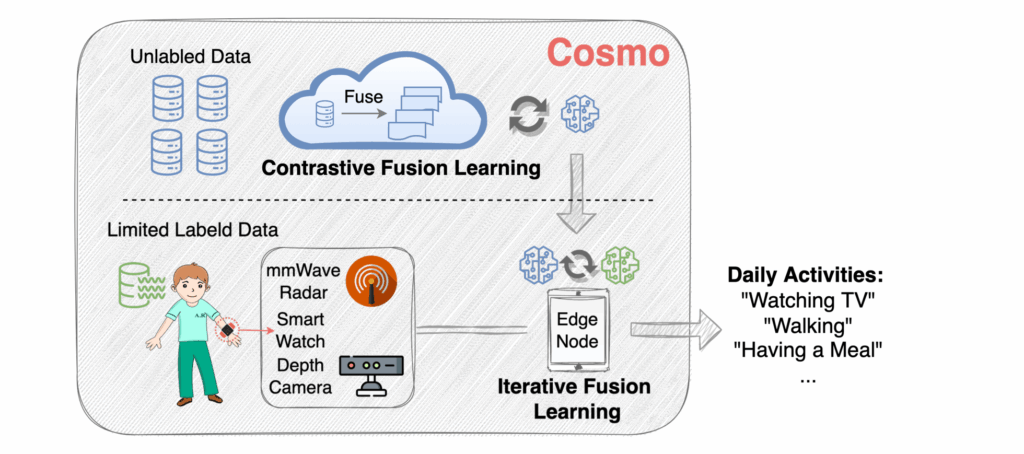

Cosmo features a novel two-stage training strategy that leverages both unlabeled data on the cloud and limited labeled data on the edge. By integrating novel fusion-based contrastive learning and quality-guided attention mechanisms, Cosmo can effectively extract both consistent and complementary information across different modalities for efficient fusion.
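The contrastive ingredient can be illustrated with a standard cross-modal InfoNCE loss: embeddings of the same sample from two modalities form a positive pair, and the other samples in the batch act as negatives. This is a generic textbook formulation assumed for illustration; Cosmo's fusion-based variant and its quality-guided attention are not reproduced here.

```python
import math
from typing import List

Vec = List[float]


def cosine(a: Vec, b: Vec) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def info_nce(za: List[Vec], zb: List[Vec], temperature: float = 0.1) -> float:
    """Mean cross-modal InfoNCE loss.

    za[i] and zb[i] embed the same sample from modalities A and B (positive pair);
    every zb[j] with j != i acts as a negative for za[i].
    """
    total = 0.0
    for i, anchor in enumerate(za):
        logits = [cosine(anchor, z) / temperature for z in zb]
        m = max(logits)  # subtract the max before exponentiating, for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(s - m) for s in logits))
        total += log_sum_exp - logits[i]  # equals -log(softmax probability of the positive)
    return total / len(za)
```

Minimizing this loss pulls the two modalities' views of a sample together, which is how such methods extract information that is consistent across modalities.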

- MobiSys '21

ClusterFL can efficiently drop the nodes that converge more slowly or have little correlation with other nodes in each cluster, significantly speeding up convergence while maintaining accuracy.
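A toy version of such a drop-out rule might keep a node only if its model update correlates with the mean update of the rest of its cluster and its training loss is still falling fast enough. The scoring and both thresholds are hypothetical choices for illustration; ClusterFL's actual criterion may differ.

```python
import math
from typing import Dict, List


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0.0 or nb == 0.0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / (na * nb)


def select_nodes(updates: Dict[str, List[float]], loss_drop: Dict[str, float],
                 min_corr: float = 0.5, min_loss_drop: float = 0.01) -> List[str]:
    """Return the nodes of one cluster to keep for the next aggregation round.

    updates:   each node's latest model update (flattened)
    loss_drop: each node's recent decrease in training loss (a convergence-speed proxy)
    """
    if len(updates) < 2:
        return sorted(updates)
    kept = []
    for node, u in updates.items():
        others = [v for n, v in updates.items() if n != node]
        mean_others = [sum(col) / len(others) for col in zip(*others)]
        correlated = cosine(u, mean_others) >= min_corr
        converging = loss_drop[node] >= min_loss_drop
        if correlated and converging:
            kept.append(node)
    return sorted(kept)
```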

Autonomous Driving

Our research in autonomous driving uses real-time AI and smart roadside infrastructure to advance systems like Soar, which integrates software and hardware components to support autonomous vehicles end to end. The αLiDAR system enhances LiDAR sensors with adaptive scanning capabilities. VILAM leverages infrastructure for precise 3D localization and mapping, correcting errors in vehicle-built maps. VI-Map maintains accurate HD maps by merging roadside and on-vehicle data in real time. Together, these innovations boost precision and reliability in autonomous driving.

MobiCom ’21

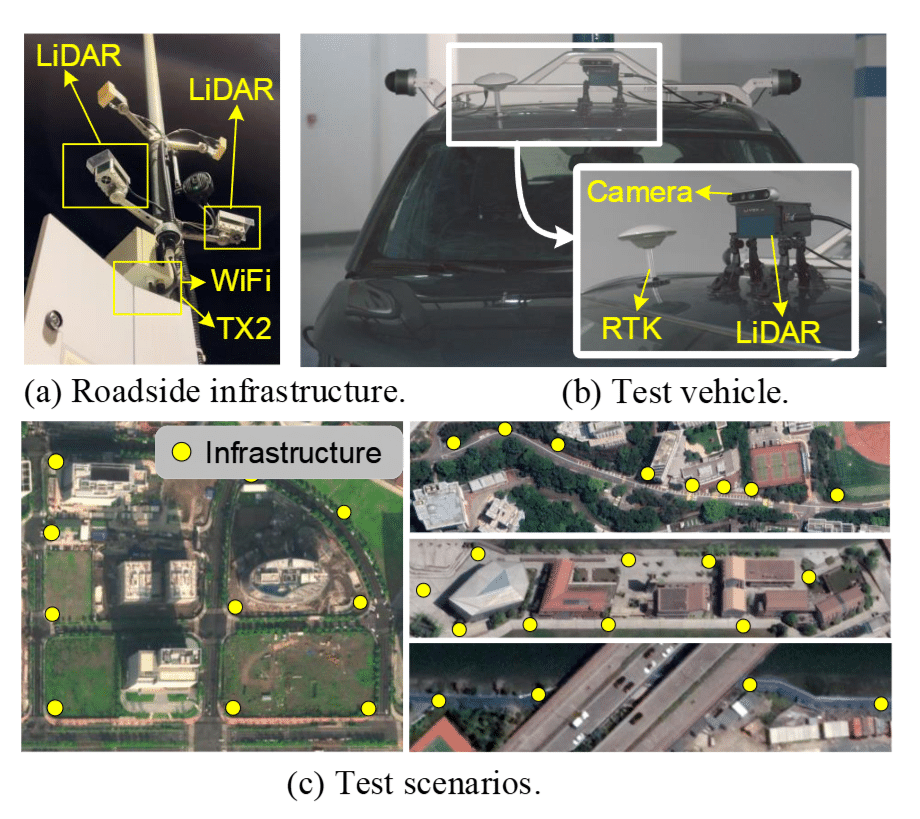

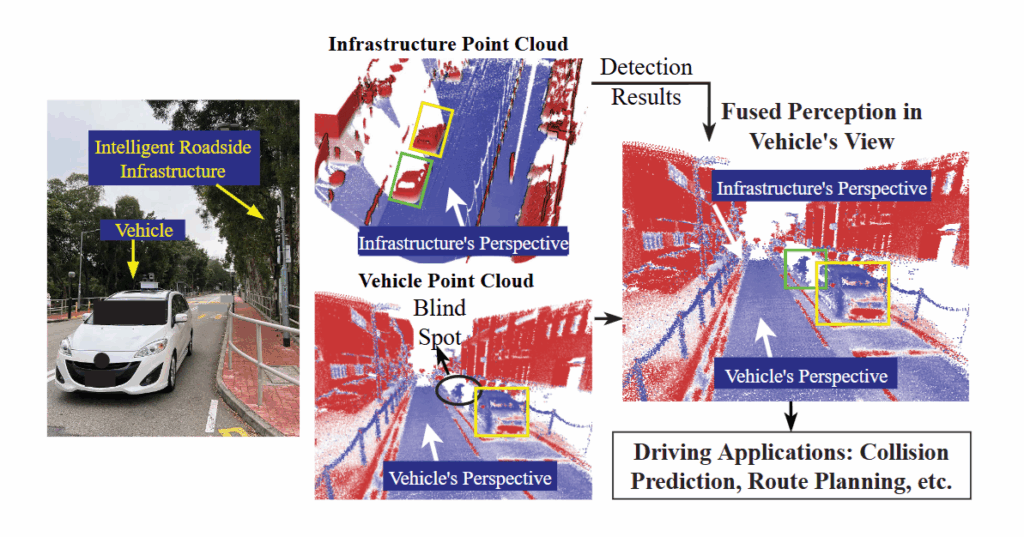

In this paper, we present VI-Eye, the first system that aligns vehicle-infrastructure point cloud pairs at centimeter accuracy in real time, enabling a broad range of on-vehicle autonomous driving applications. Evaluations on two self-collected datasets show that VI-Eye outperforms state-of-the-art baselines in accuracy, robustness, and efficiency.
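As a generic illustration of what aligning a point cloud pair computes, the sketch below recovers the rigid transform (rotation plus translation) between two point sets using the closed-form 2D least-squares (Procrustes) solution. It assumes point correspondences are already known and restricts the problem to the ground plane (x, y, yaw) for brevity; it is not VI-Eye's pipeline.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def align_2d(src: List[Point], dst: List[Point]) -> Tuple[float, float, float]:
    """Least-squares (yaw, tx, ty) such that dst[i] ≈ R(yaw) @ src[i] + t.

    Assumes src[i] and dst[i] are already known to be the same physical point.
    """
    n = len(src)
    csx, csy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    cdx, cdy = sum(p[0] for p in dst) / n, sum(p[1] for p in dst) / n
    # Closed-form 2D Procrustes: yaw from summed cross and dot products of centred points.
    cross = dot = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        ax, ay, bx, by = ax - csx, ay - csy, bx - cdx, by - cdy
        cross += ax * by - ay * bx
        dot += ax * bx + ay * by
    yaw = math.atan2(cross, dot)
    c, s = math.cos(yaw), math.sin(yaw)
    # Translation maps the rotated source centroid onto the destination centroid.
    return yaw, cdx - (c * csx - s * csy), cdy - (s * csx + c * csy)
```

In practice, finding the correspondences between vehicle and infrastructure points is the hard part; once they exist, the transform itself has a cheap closed form like this one.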

MobiCom ’22

In this paper, we present VIPS, a novel system that fuses the objects detected by the vehicle and the infrastructure to expand the vehicle’s perception in real time, which facilitates a number of autonomous driving applications. We implement VIPS end-to-end and evaluate its performance on two self-collected datasets. The experimental results show that VIPS outperforms existing approaches in accuracy, robustness, and efficiency.

🏆Best Paper Award Runner-Up

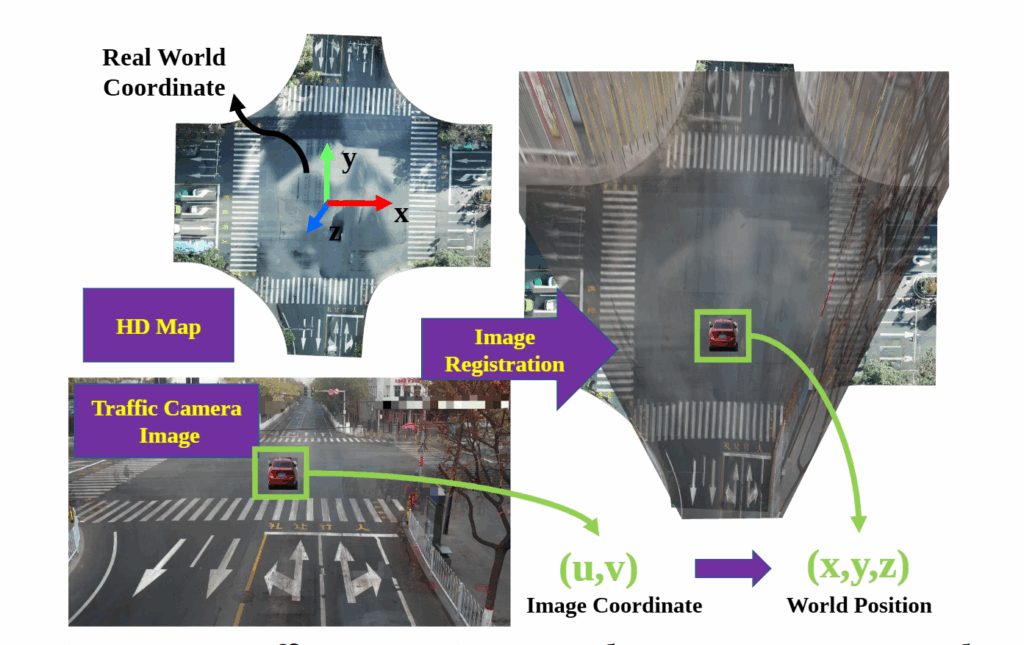

SenSys ’22

This paper presents AutoMatch, the first system that matches traffic camera-vehicle image pairs or traffic camera-HD map image pairs at pixel-level accuracy in real time with low communication and compute overhead. This is a key technology for leveraging traffic cameras to assist the perception and localization of autonomous driving. We conduct extensive evaluations on two self-collected datasets, which show that AutoMatch outperforms SOTA baselines in robustness, accuracy, and efficiency.

- MobiCom '24

The core concept of αLiDAR is to expand the operational freedom of a LiDAR sensor through the incorporation of a controllable, active rotational mechanism. This modification allows the sensor to scan previously inaccessible blind spots and focus on specific areas of interest in an adaptive manner.

- MobiCom '24

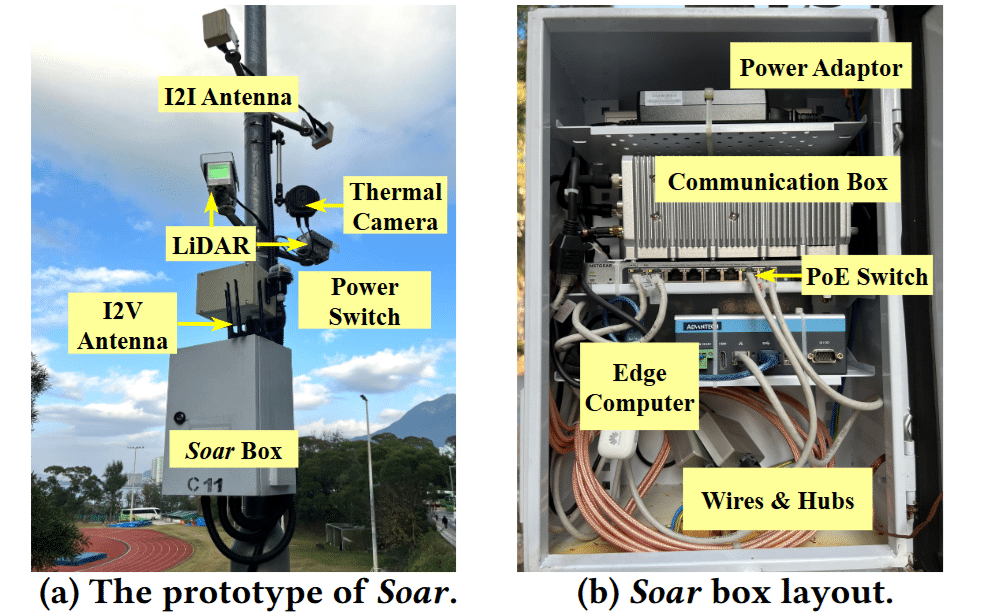

Soar is the first end-to-end smart roadside infrastructure (SRI) system specifically designed to support autonomous driving systems. Soar consists of both software and hardware components carefully designed to overcome various system and physical challenges.

- NSDI '24

VILAM is a novel framework that leverages intelligent roadside infrastructures to realize high-precision and globally consistent localization and mapping on autonomous vehicles. The key idea of VILAM is to use the precise scene measurements from the infrastructure as global references to correct errors in the local map constructed by the vehicle.

- MobiCom '23

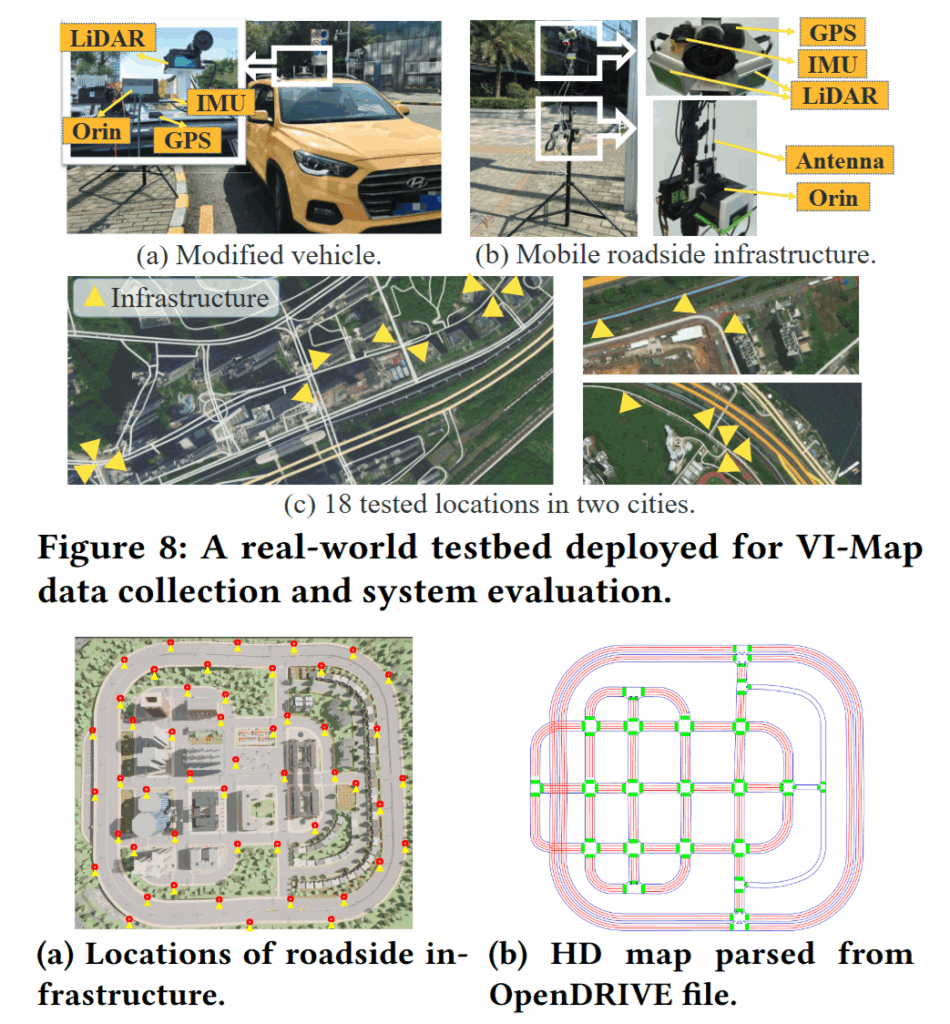

The core concept of VI-Map is to exploit the unique cumulative observations made by roadside infrastructure to build and maintain an accurate and current HD map. This HD map is then fused with on-vehicle HD maps in real time, resulting in a more comprehensive and up-to-date HD map.

- MobiCom '22

VIPS is a novel lightweight system that achieves decimeter-level, real-time (within 100 ms) perception fusion between driving vehicles and roadside infrastructure. The key idea of VIPS is to exploit highly efficient matching of graph structures that encode objects’ lean representations as well as their relationships, such as locations, semantics, sizes, and spatial distribution.
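To make graph matching concrete, here is a brute-force sketch under simplifying assumptions: each side's detections form a complete graph whose edges are pairwise distances, and we choose the one-to-one assignment with matching semantic labels that minimizes total edge-length disagreement. Because distances do not change under rotation or translation, the two graphs can be matched without knowing the transform between the vehicle and infrastructure frames. Exhaustive search over permutations only scales to a handful of objects; it stands in for, and is far less efficient than, whatever matching VIPS actually uses.

```python
import itertools
import math
from typing import List, Optional, Tuple

Obj = Tuple[str, float, float]  # (semantic label, x, y) in the observer's own frame


def edge_cost(a: List[Obj], b: List[Obj], perm: Tuple[int, ...]) -> float:
    """Sum of |d_a(i, j) - d_b(perm[i], perm[j])| over all object pairs i < j."""
    cost = 0.0
    for i, j in itertools.combinations(range(len(a)), 2):
        da = math.dist(a[i][1:], a[j][1:])
        db = math.dist(b[perm[i]][1:], b[perm[j]][1:])
        cost += abs(da - db)
    return cost


def match_graphs(a: List[Obj], b: List[Obj]) -> Optional[Tuple[int, ...]]:
    """Return perm with a[i] matched to b[perm[i]]; None if no label-consistent match exists."""
    best, best_cost = None, math.inf
    for perm in itertools.permutations(range(len(b)), len(a)):
        if any(a[i][0] != b[perm[i]][0] for i in range(len(a))):
            continue  # semantic labels must agree
        cost = edge_cost(a, b, perm)
        if cost < best_cost:
            best, best_cost = perm, cost
    return best
```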

MobiCom ’23

In this paper, we present VI-Map, the first system that uses the unique advantages of roadside infrastructure to enhance on-vehicle HD maps by providing accurate and timely infrastructure HD maps. We have implemented VI-Map end-to-end, and the experimental results show that VI-Map enhances existing HD mapping methods in terms of map geometry accuracy, map topology freshness, system robustness, and efficiency.

🏆Best Community Contribution Award

MobiCom ’24

This paper presents the design and deployment of Soar, the first end-to-end SRI system specifically designed to support autonomous vehicles (AVs). Soar consists of carefully designed components for data and DL task management, infrastructure-to-infrastructure (I2I) and infrastructure-to-vehicle (I2V) communication, and an integrated hardware platform. Together, these address a multitude of system and physical challenges, make it possible to leverage existing operational traffic infrastructure, and hence lower the barrier to adoption.

🏆Best Artifact Award Runner-up

NSDI ’24

In this paper, we propose VILAM, a novel framework that leverages intelligent roadside infrastructures to realize high-precision and globally consistent localization and mapping on autonomous vehicles. The key idea of VILAM is to utilize the precise scene measurement from the infrastructure as global references to correct errors in the local map constructed by the vehicle.

Embedded ML/LLM Systems

Our research in embedded ML/LLM systems aims to enhance the functionality and integration of sensor systems. We develop technologies that translate sensor capabilities and data dependencies into vocabularies and grammar rules for large language models, allowing for the conversion of user intentions into executable task plans. Additionally, we leverage foundation models for open-set learning on the edge, improving adaptability and performance. These innovations collectively enhance the efficiency and effectiveness of embedded systems through advanced AI integration.

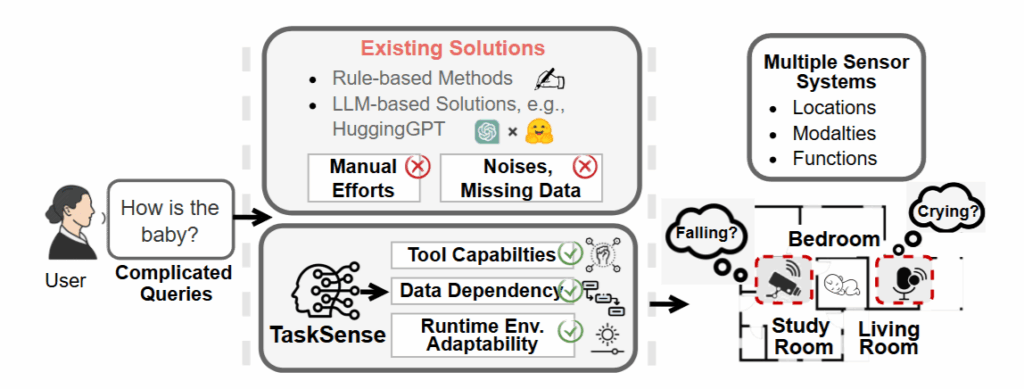

- SenSys '25

TaskSense introduces a sensor language that automatically translates the capabilities and data dependencies of sensor systems into vocabularies and grammar rules that LLMs can understand. It then combines this sensor language with LLMs to translate user intentions into executable task plans for sensor systems.
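One way to picture a sensor language is a small vocabulary of sensor capabilities, each declaring the data it consumes and produces, plus a grammar rule that a task plan is well-formed only if every step's inputs are produced by an earlier step. The sketch below is a hypothetical, heavily simplified illustration of that idea (the capability names are invented, and the LLM that would propose plans is omitted); it is not TaskSense's grammar.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical vocabulary: capability -> (required input data, produced output data)
VOCAB: Dict[str, Tuple[Set[str], Set[str]]] = {
    "mic.record": (set(), {"audio"}),
    "imu.sample": (set(), {"accel"}),
    "asr.transcribe": ({"audio"}, {"text"}),
    "har.classify": ({"accel"}, {"activity"}),
}


def validate_plan(plan: List[str]) -> List[str]:
    """Return grammar violations for a plan; an empty list means it is executable."""
    available: Set[str] = set()
    errors = []
    for step, cap in enumerate(plan):
        if cap not in VOCAB:
            errors.append(f"step {step}: unknown capability '{cap}'")
            continue
        needs, makes = VOCAB[cap]
        missing = needs - available
        if missing:
            errors.append(f"step {step}: '{cap}' missing {sorted(missing)}")
        available |= makes  # outputs become available to later steps
    return errors
```

A checker like this can reject or repair an LLM-proposed plan before it ever reaches the sensors.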

- MobiCom '24

Asteroid is a distributed edge training system that breaks the resource walls across heterogeneous edge devices to accelerate model training. Asteroid adopts hybrid pipeline parallelism to orchestrate distributed training, along with judicious parallelism planning to maximize throughput under given resource constraints.
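Pipeline planning is often posed as splitting a model's layers into contiguous stages, one per device, so that the slowest stage (which bounds pipeline throughput) is as fast as possible. The dynamic program below solves that simplified problem for a fixed device order, scaling per-layer compute cost by each device's relative speed. It ignores memory limits, communication, and the data-parallel half of a hybrid scheme, so treat it as an assumption-laden illustration rather than Asteroid's planner.

```python
import math
from itertools import accumulate
from typing import List, Tuple


def plan_pipeline(layer_cost: List[float], device_speed: List[float]) -> Tuple[float, List[int]]:
    """Split layers into len(device_speed) contiguous, non-empty stages minimizing the bottleneck.

    Returns (bottleneck_time, cuts) where stage k runs layers cuts[k] .. cuts[k + 1] - 1
    on device k, taking sum(layer_cost[...]) / device_speed[k] time units.
    """
    n_layers, n_devices = len(layer_cost), len(device_speed)
    if n_devices > n_layers:
        raise ValueError("need at least one layer per device")
    prefix = [0.0] + list(accumulate(layer_cost))
    # dp[d][j]: best bottleneck when the first j layers run on the first d devices
    dp = [[math.inf] * (n_layers + 1) for _ in range(n_devices + 1)]
    choice = [[0] * (n_layers + 1) for _ in range(n_devices + 1)]
    dp[0][0] = 0.0
    for d in range(1, n_devices + 1):
        for j in range(d, n_layers + 1):
            for i in range(d - 1, j):  # device d - 1 runs layers i .. j - 1
                stage = (prefix[j] - prefix[i]) / device_speed[d - 1]
                cand = max(dp[d - 1][i], stage)
                if cand < dp[d][j]:
                    dp[d][j], choice[d][j] = cand, i
    # Walk back through the recorded split points.
    cuts, j = [n_layers], n_layers
    for d in range(n_devices, 0, -1):
        j = choice[d][j]
        cuts.append(j)
    return dp[n_devices][n_layers], cuts[::-1]
```

For example, with four equal layers and a device three times faster than its peer, the plan gives three layers to the fast device and one to the slow device, so both stages finish at the same time.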

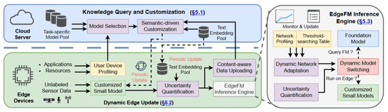

- SenSys '23

EdgeFM is a novel edge-cloud cooperative system with open-set recognition capability. EdgeFM selectively uploads unlabeled data to query the foundation model (FM) on the cloud and customizes the specific knowledge and architectures for edge models. Meanwhile, EdgeFM performs dynamic model switching at run time, taking into account both data uncertainty and dynamic network variations, which keeps accuracy close to that of the original FM.
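A minimal sketch of a run-time switching rule in this spirit: answer locally when the edge model is confident (low predictive entropy), and otherwise query the cloud FM only if the current network round-trip plus cloud inference time fits the deadline. The entropy threshold, the deadline, and the function names are hypothetical; EdgeFM's actual policy is not reproduced here.

```python
import math
from typing import List


def entropy(probs: List[float]) -> float:
    """Shannon entropy (in nats) of a predictive distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def route(probs: List[float], net_rtt_ms: float, cloud_infer_ms: float,
          deadline_ms: float, max_entropy: float = 0.5) -> str:
    """Decide whether one sample is answered by the 'edge' model or the 'cloud' FM."""
    if entropy(probs) <= max_entropy:
        return "edge"  # edge model is confident enough
    if net_rtt_ms + cloud_infer_ms <= deadline_ms:
        return "cloud"  # uncertain, and the cloud FM can still answer in time
    return "edge"  # uncertain, but the network is too slow: fall back to the edge answer
```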

On-Device Deep Learning

SenSys ’21 ’22, HotMobile ’23

Real-time deep learning (DL) on edge devices faces challenges like high computational demands, diverse task requirements, and limited framework support. This project explores DL task scheduling, model scaling, and latency-accuracy trade-offs to optimize performance for resource-constrained platforms. The goal is to enable efficient on-device DL execution for applications like autonomous driving while meeting real-time constraints.

🏆Best Paper Finalist

Edge-Cloud Cooperation

IPSN ’23, IoTDI ’21, SenSys ’23

Deep learning models on IoT devices face challenges in generalizing across diverse environments due to limited resources, despite advancements in algorithms and hardware. Foundation models (FMs) offer strong generalization, but leveraging their knowledge on resource-constrained edge devices remains unexplored.

Mobile Sensing

Our research in mobile sensing focuses on developing innovative systems that enhance perception and interaction in challenging environments. We have created systems that leverage advanced technologies such as multi-modal sensors, mmWave radars, and Time-of-Flight (ToF) cameras. These systems enable human-like perception for social assistance, egocentric human mesh reconstruction, and high-resolution sensing in low-light conditions. By addressing limitations like restricted sensing range, occlusion, and noise, our work significantly improves the capabilities and applications of mobile sensing technologies, offering real-time, low-cost, and high-performance solutions for next-generation applications.

- IMWUT '25

SocialMind is the first LLM-based proactive AR social assistive system that provides users with in-situ social assistance. SocialMind employs human-like perception, leveraging multi-modal sensors to extract verbal and nonverbal cues, social factors, and implicit personas, and incorporates these social cues into LLM reasoning to generate social suggestions.

- SenSys '25

Argus is a wearable add-on system based on stripped-down (i.e., compact, lightweight, low-power, limited-capability) mmWave radars. It is the first to achieve egocentric human mesh reconstruction in a multi-view manner. Compared with conventional frontal-view mmWave sensing solutions, it addresses several pain points, such as restricted sensing range, occlusion, and the multipath effect caused by surroundings.

- IPSN '24

ArtFL is a novel federated learning system designed to support dynamic runtime inference through multi-scale training. The key idea of ArtFL is to use data resolution, i.e., the frame resolution of videos, as a knob to accommodate dynamic inference latency requirements. Specifically, we first propose data-utility-based multi-scale training, which allows the trained model to process data of varying resolutions during inference.
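Using resolution as a latency knob can be sketched as follows: given profiled inference latencies for each supported input resolution (the numbers below are made up), pick the largest resolution whose latency fits the current budget, and fall back to the smallest one otherwise. This only illustrates inference-time selection; ArtFL's data-utility-based multi-scale training is not shown.

```python
from typing import Dict


def pick_resolution(latency_ms: Dict[int, float], budget_ms: float) -> int:
    """Largest resolution (e.g. frame height in pixels) whose profiled latency fits the budget."""
    feasible = [res for res, t in latency_ms.items() if t <= budget_ms]
    return max(feasible) if feasible else min(latency_ms)


# Hypothetical profile on one device: resolution -> measured inference latency (ms)
PROFILE = {224: 18.0, 160: 10.5, 112: 6.0}
```

Because a single multi-scale model serves all resolutions, switching between them at run time requires no model reload.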

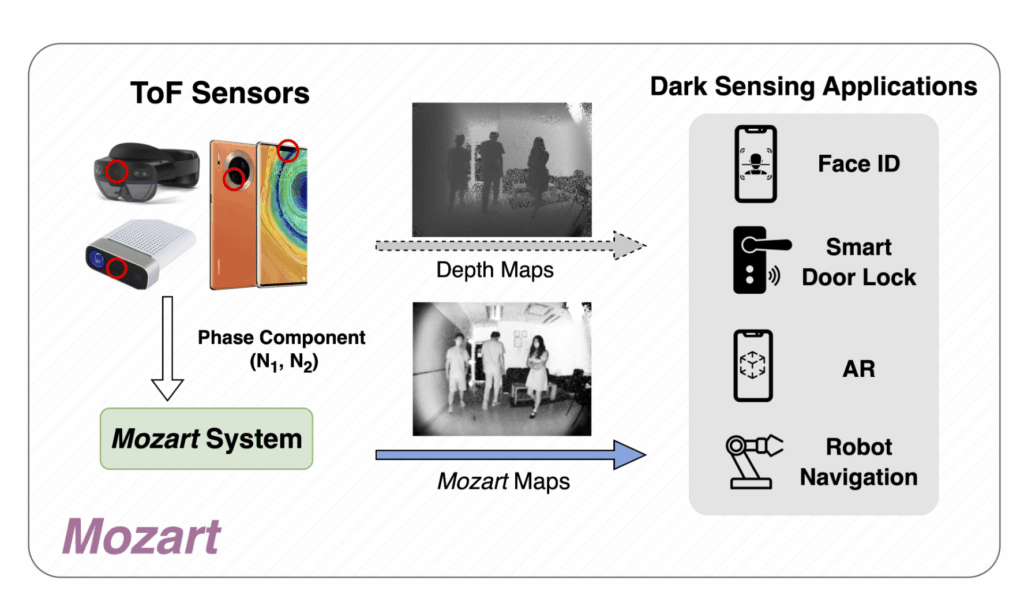

- MobiSys '23

Mozart is a breakthrough mobile sensing system using Time-of-Flight (ToF) cameras to generate high-resolution maps in dark environments. By innovatively manipulating ToF phase components, it enhances texture information. Implemented on Android devices, Mozart operates in real-time, providing a cost-effective, high-performance solution for advanced sensing in the dark.

Wireless Systems

Our research in wireless systems is driven by a vision to revolutionize wireless communication through cutting-edge technologies. We focus on developing advanced multi-path estimation algorithms like BeamSense and exploring NB-IoT power consumption with tools such as NB-Scope. Our direction involves applying these innovations to Wi-Fi devices and conducting extensive field measurements to push the boundaries of wireless technology.

- SIGCOMM '23

BeamSense adopts a novel multi-path estimation algorithm that can efficiently and accurately map bidirectional compressed beamforming reports (CBRs) to a multi-path channel based on intrinsic fingerprints. We implement BeamSense on several prevalent models of Wi-Fi devices and evaluate its performance with microbenchmarks and three representative Wi-Fi sensing applications.

- MobiCom '20

NB-Scope is the first hardware NB-IoT diagnostic tool that supports fine-grained fusion of power and protocol traces. Using NB-Scope, we then conduct a large-scale field measurement study of 30 nodes deployed at over 1,200 locations in 3 regions over a period of three months.
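Fusing power and protocol traces essentially means placing them on a common timeline so that energy can be attributed to protocol states. The sketch below assumes both traces share a clock, power is sampled at a fixed interval, and each protocol event marks the start of a new state that lasts until the next event; the state names are hypothetical, and NB-Scope's hardware-level synchronization is not modeled.

```python
import bisect
from collections import defaultdict
from typing import Dict, List, Tuple


def energy_per_state(power: List[Tuple[float, float]], events: List[Tuple[float, str]],
                     dt_s: float) -> Dict[str, float]:
    """Attribute energy (joules) to protocol states.

    power:  (timestamp_s, watts) samples taken every dt_s seconds
    events: (timestamp_s, state) sorted by time; each state lasts until the next event
    """
    starts = [t for t, _ in events]
    energy: Dict[str, float] = defaultdict(float)
    for t, watts in power:
        k = bisect.bisect_right(starts, t) - 1  # index of the latest event at or before t
        if k >= 0:  # ignore samples taken before the first logged event
            energy[events[k][1]] += watts * dt_s
    return dict(energy)
```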

- MobiSys '13

LEAD is a new cross-layer solution that improves the performance of existing channel decoders. While conventional wisdom in cross-layer design is to exploit physical-layer information at upper layers, LEAD represents a paradigm shift: it leverages upper-layer protocol signatures to improve the performance of physical-layer channel decoding. The approach of LEAD is motivated by two key insights.


On-Device Deep Learning

Recent years have witnessed an emerging class of real-time deep learning (DL) applications, e.g., autonomous driving, in which resource-constrained edge platforms need to execute a set of mixed deep learning algorithms concurrently.

Kaiwei Liu, Bufang Yang, Lilin Xu, Yunqi Guo, Guoliang Xing, Xian Shuai, Xiaozhe Ren, Xin Jiang, and Zhenyu Yan. TaskSense: A Translation-like Approach for Tasking Heterogeneous Sensor Systems with LLMs. In The 23rd ACM Conference on Embedded Networked Sensor Systems (Acceptance rate: 46/244=19%), 2025

Heming Fu, Hongkai Chen, Shan Lin, and Guoliang Xing. SHADE-AD: An LLM-based Framework for Synthesizing Activity Data of Alzheimer’s Patients. In The 23rd ACM Conference on Embedded Networked Sensor Systems (Acceptance rate: 46/244=19%), 2025
